Spark Read Avro

Apache Avro is a data serialization system that is commonly used in the streaming world. Among other things it defines a container file to store persistent data, and Spark can read Avro data into a DataFrame. In PySpark, `pyspark.sql.avro.functions.from_avro(data, jsonFormatSchema, options={})` converts a binary column of Avro format into its corresponding Catalyst value; the specified schema must match the data that is read. A typical solution is to put data in Avro format in Apache Kafka, metadata in … In sparklyr, notice that this functionality requires the Spark connection `sc` to be instantiated with either an explicitly specified Spark version (i.e., `spark_connect(..., version = <version>, packages = c("avro", <other package(s)>), ...)`) or a specific version of the Spark Avro package to use (e.g., spark…).
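As an illustration of the `from_avro` call described above, here is a minimal sketch. The record name `User`, its two fields, the helper names, and the default column name `value` are assumptions made for the example, not part of the original text. The schema is passed as a JSON string, which is what `from_avro` expects.

```python
import json


def user_schema_json() -> str:
    """Return a hypothetical Avro record schema as a JSON string."""
    schema = {
        "type": "record",
        "name": "User",  # hypothetical record name
        "fields": [
            {"name": "name", "type": "string"},
            # A union with "null" makes the field optional.
            {"name": "favorite_color", "type": ["null", "string"], "default": None},
        ],
    }
    return json.dumps(schema)


def decode_avro_column(df, binary_col: str = "value"):
    """Decode the binary Avro column of `df` into a struct column named `user`."""
    # Imported lazily so the schema helper above works without Spark installed.
    from pyspark.sql.avro.functions import from_avro

    return df.select(from_avro(df[binary_col], user_schema_json()).alias("user"))
```

The sketch assumes that the bytes in the column were written with exactly this schema; if they were not, the decoded values cannot be relied on.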

The Avro data source for Spark supports reading and writing Avro data from Spark SQL. Please note that the module is not bundled with standard Spark, so it has to be added to the application separately. For reading Avro messages from Kafka and parsing them with PySpark there are no direct libraries, but the messages can still be read and parsed by writing the decoding step yourself. In the write example, the columns of the sample DataFrame are named with `toDF("year", "month", "title", "rating")`.
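Because the module is not bundled with standard Spark, one common way to make it available is to list its Maven coordinate in the `spark.jars.packages` setting. The sketch below assumes a Scala 2.12 build and a caller-supplied Spark version. The helper names and the application name `read-avro` are hypothetical.

```python
def spark_avro_coordinate(spark_version: str, scala_version: str = "2.12") -> str:
    """Build the Maven coordinate of the spark-avro module.

    The version should match the Spark version in use; the Scala
    version default is an assumption made for this sketch.
    """
    return f"org.apache.spark:spark-avro_{scala_version}:{spark_version}"


def build_session(spark_version: str):
    """Create a SparkSession that downloads spark-avro on startup."""
    # Imported lazily so the coordinate helper works without Spark installed.
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder
        .appName("read-avro")  # hypothetical application name
        .config("spark.jars.packages", spark_avro_coordinate(spark_version))
        .getOrCreate()
    )
```

The same coordinate can be passed on the command line with the `--packages` option of `spark-submit`.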

Spark can also read and write streaming Avro data, and code generation is not required to read Avro data. A batch read and write looks like `df = spark.read.format("avro").load("examples/src/main/resources/users.avro")` followed by `df.select("name", "favorite_color").write.format("avro").save("namesAndFavColors.avro")`; the question quoted here, however, needs to read streamed Avro. A related error report involves the union type `[ "null", "string" ]`, which the author tried to work around by manually creating a …
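The batch read and write from the paragraph above can be wrapped in a small function. This is only a sketch: the function name is hypothetical, and it assumes an existing SparkSession that already has the Avro module available.

```python
def copy_names_and_colors(spark, src: str, dst: str) -> None:
    """Read an Avro file, keep two columns, and write the result back as Avro."""
    # "avro" is the short name of the data source once spark-avro is on the classpath.
    df = spark.read.format("avro").load(src)
    df.select("name", "favorite_color").write.format("avro").save(dst)


# Example call, assuming an existing SparkSession named `spark`:
# copy_names_and_colors(
#     spark,
#     "examples/src/main/resources/users.avro",
#     "namesAndFavColors.avro",
# )
```

This covers batch files only; for streamed Avro the bytes usually arrive as a binary column and have to be decoded with `from_avro` instead.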


This library allows developers to easily read Avro data. When writing, the output can be partitioned by column with `partitionBy("year", "month")`. Please deploy the application as per the deployment section of the Apache Avro Data Source Guide.
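A partitioned write along the lines of the `toDF` and `partitionBy` fragments might look like the sketch below. The sample rows and both function names are hypothetical. `partition_dir` only illustrates the Hive-style directory layout that Spark produces for partitioned output.

```python
def partition_dir(year: int, month: int) -> str:
    """Return the Hive-style sub-directory used for one (year, month) pair."""
    return f"year={year}/month={month}"


def write_partitioned(spark, path: str) -> None:
    """Write a small DataFrame as Avro, partitioned by year and month."""
    # Hypothetical sample rows: (year, month, title, rating).
    rows = [
        (2012, 8, "Batman", 9.8),
        (2012, 8, "Hero", 8.7),
        (2011, 7, "Git", 2.0),
    ]
    df = spark.createDataFrame(rows).toDF("year", "month", "title", "rating")
    df.write.partitionBy("year", "month").format("avro").save(path)
```

With this layout a later read that filters on `year` and `month` only needs to touch the matching sub-directories.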

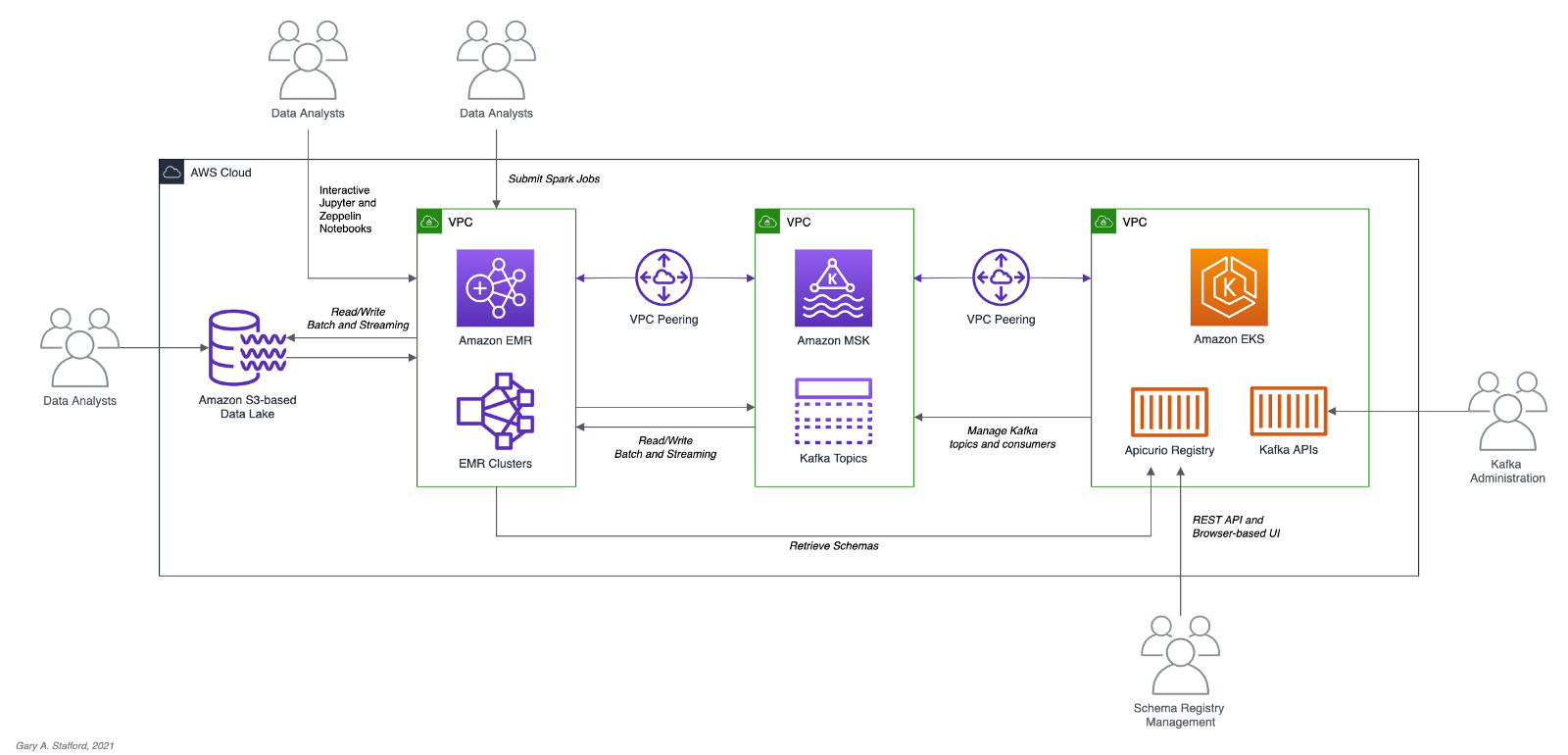

Stream Processing with Apache Spark, Kafka, Avro, and Apicurio Registry

Avro provides a container file to store persistent data and simple integration with dynamic languages. Code generation is not required to read Avro data.

Requiring .avro extension in Spark 2.0+ · Issue 203 · databricks/spark

When trying to read an Avro file with `val df = spark.read.avro(file)`, a reader can run into the error "Avro schema cannot be converted to a Spark SQL StructType:". In the reported case the type in question was `[ "null", "string" ]`.


Notice This Functionality Requires The Spark Connection sc To Be Instantiated With Either An Explicitly Specified Spark Version (I.e., spark_connect(..., version = <version>, packages = c("avro", <other package(s)>), ...)) Or A Specific Version Of Spark Avro.

The specified schema must match the data that is read. Spark can read and write streaming Avro data once the application is deployed as per the deployment section of the Apache Avro Data Source Guide. If you are using Spark 2.3 or older, the source points to a separate URL for those versions.

A Compact, Fast, Binary Data Format.

Apache Avro data can be read into a Spark DataFrame, but please note that the module is not bundled with standard Spark. A schema can declare an optional string as the union `[ "null", "string" ]`.
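To make the idea of a compact binary format more concrete, the sketch below encodes a few values by hand, following the Avro specification: integers use variable-length zig-zag coding, a string is its byte length followed by UTF-8 bytes, and a union is the index of the chosen branch followed by the value. The function names are made up for this illustration, and real applications should rely on an Avro library rather than code like this.

```python
from typing import Optional


def zigzag(n: int) -> int:
    """Map a signed 64-bit integer to an unsigned one so small magnitudes stay small."""
    return (n << 1) ^ (n >> 63)


def encode_long(n: int) -> bytes:
    """Encode an integer as a zig-zag varint, 7 bits per byte, low bits first."""
    z = zigzag(n)
    out = bytearray()
    while z > 0x7F:
        out.append((z & 0x7F) | 0x80)  # high bit set: more bytes follow
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_string(s: str) -> bytes:
    """Encode a string as its UTF-8 length (a long) followed by the bytes."""
    data = s.encode("utf-8")
    return encode_long(len(data)) + data


def encode_nullable_string(value: Optional[str]) -> bytes:
    """Encode a value of the union ["null", "string"]."""
    if value is None:
        return encode_long(0)  # branch 0 is "null", which has no body
    return encode_long(1) + encode_string(value)  # branch 1 is "string"
```

Under these rules the optional string `"abc"` takes five bytes in total: one for the union branch, one for the length, and three for the characters.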

July 18, 2023: Apache Avro Is A Data Serialization System.

Apache Avro is a commonly used data serialization system in the streaming world, and code generation is not required to read it. For reading Avro messages from Kafka and parsing them with PySpark, there are no direct libraries.


If reading Avro data into a Spark DataFrame fails with an error that starts with "Failed to find data source:", the Avro module is most likely missing from the application, since it is not bundled with standard Spark.