Read A Parquet File In PySpark

Parquet is a columnar format that is supported by many other data processing systems. Spark SQL provides support for both reading and writing Parquet files, and it automatically preserves the schema of the original data. In PySpark, the `DataFrameReader` class provides a `parquet()` method that reads Parquet files into a DataFrame. Its signature is `DataFrameReader.parquet(*paths, **options)`: it accepts one or more paths plus optional reader options and returns a `DataFrame`.

Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe. Web i use the following two ways to read the parquet file: Parquet is a columnar format that is supported by many other data processing systems. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc). Optionalprimitivetype) → dataframe [source] ¶. Web pyspark read parquet file into dataframe. I wrote the following codes. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Spark sql provides support for both reading and. Web i want to read a parquet file with pyspark.

Parquet is a columnar format that is supported by many other data processing systems. Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Web pyspark read parquet file into dataframe. I wrote the following codes. Spark sql provides support for both reading and. Web i use the following two ways to read the parquet file: Web i want to read a parquet file with pyspark. Optionalprimitivetype) → dataframe [source] ¶. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc).

How To Read A Parquet File Using Pyspark Vrogue

From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Spark sql provides support for both reading and. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc). Web i want to read a parquet file with pyspark. Parquet is a columnar format that is supported by many other data processing systems.

How to read a Parquet file using PySpark

Spark sql provides support for both reading and. Web i use the following two ways to read the parquet file: From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc). Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe. Optionalprimitivetype) → dataframe [source] ¶.

How to resolve Parquet File issue

Web i want to read a parquet file with pyspark. Optionalprimitivetype) → dataframe [source] ¶. Parquet is a columnar format that is supported by many other data processing systems. I wrote the following codes. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \.

PySpark Read and Write Parquet File Spark by {Examples}

Optionalprimitivetype) → dataframe [source] ¶. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc). Parquet is a columnar format that is supported by many other data processing systems. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Web i use the following two ways to read the parquet file:

pd.read_parquet Read Parquet Files in Pandas • datagy

Web i want to read a parquet file with pyspark. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc). Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Optionalprimitivetype) → dataframe [source] ¶.

How To Read Various File Formats In Pyspark Json Parquet Orc Avro Www

From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc). Optionalprimitivetype) → dataframe [source] ¶. Web i want to read a parquet file with pyspark. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Parquet is a columnar format that is supported by many other data processing systems.

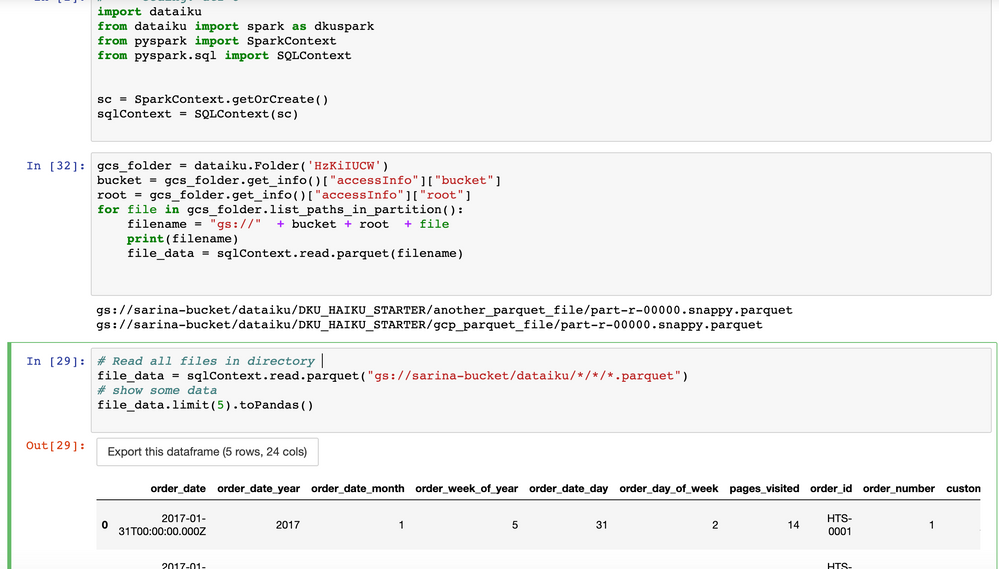

Solved How to read parquet file from GCS using pyspark? Dataiku

From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc). Spark sql provides support for both reading and. Parquet is a columnar format that is supported by many other data processing systems. Optionalprimitivetype) → dataframe [source] ¶.

Python How To Load A Parquet File Into A Hive Table Using Spark Riset

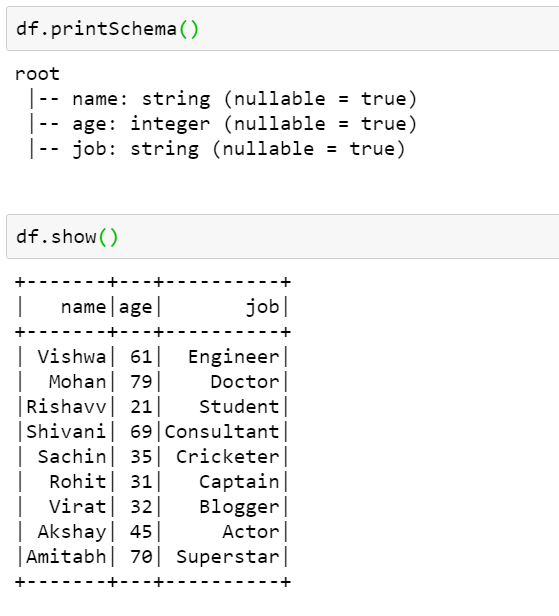

Web pyspark read parquet file into dataframe. Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe. Parquet is a columnar format that is supported by many other data processing systems. Optionalprimitivetype) → dataframe [source] ¶. Spark sql provides support for both reading and.

PySpark Tutorial 9 PySpark Read Parquet File PySpark with Python

Web pyspark read parquet file into dataframe. Web i use the following two ways to read the parquet file: Spark sql provides support for both reading and. I wrote the following codes. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc).

Read Parquet File In Pyspark Dataframe news room

Spark sql provides support for both reading and. Parquet is a columnar format that is supported by many other data processing systems. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Optionalprimitivetype) → dataframe [source] ¶. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc).

Reading With SparkSession

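A common question is how to read a Parquet file with PySpark. Since Spark 2.0 the recommended entry point is `SparkSession`: build one with `SparkSession.builder.master('local')` followed by `.getOrCreate()`, then call `spark.read.parquet(path)`, which returns a DataFrame. Reader options can be passed to `parquet()` as keyword arguments, for example `mergeSchema=True`.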

Reading With The Legacy SQLContext

Older code uses the `SQLContext` entry point instead of `SparkSession`. It wraps an existing `SparkContext`, as in `sqlContext = SQLContext(sc)`, and reaches the same `DataFrameReader.parquet()` method through `sqlContext.read.parquet(path)`. `SQLContext` is kept mainly for backward compatibility, so new code should prefer `SparkSession`.